The attention of creatives, lawmakers, and technologists is fixated on generative AI. The ability of a computer to turn a line of text into an image, sound, or video is simultaneously exciting and scary. Adobe has introduced its Firefly (beta) engine in an attempt to empower rather than replace creative professionals. The Firefly page reads, “Adobe is committed to developing creative generative AI responsibly, with creators at the center. Our mission is to give creators every advantage — not just creatively, but practically. As Firefly evolves, we will continue to work closely with the creative community to build technology that supports and improves the creative process.”

Not for commercial use

Adobe is just getting started with generative AI tools. The images produced by the Firefly beta are only for non-commercial use, according to the FAQ page. In this article, we’ve used images produced by Firefly when commenting on them (under Fair Use), but not for the header image (just to avoid any problems). One of the goals of Firefly is for creatives to be able to include imagery created with the help of AI while eliminating this kind of second-guessing.

AI tools for still images

Firefly for still images works on the web and in Photoshop. We’re going to focus on the web version. Adobe lets you upload your own images to the site or use one of its sample images. The company claims that all of the images it uses to train its AI have been appropriately licensed.

In-painting

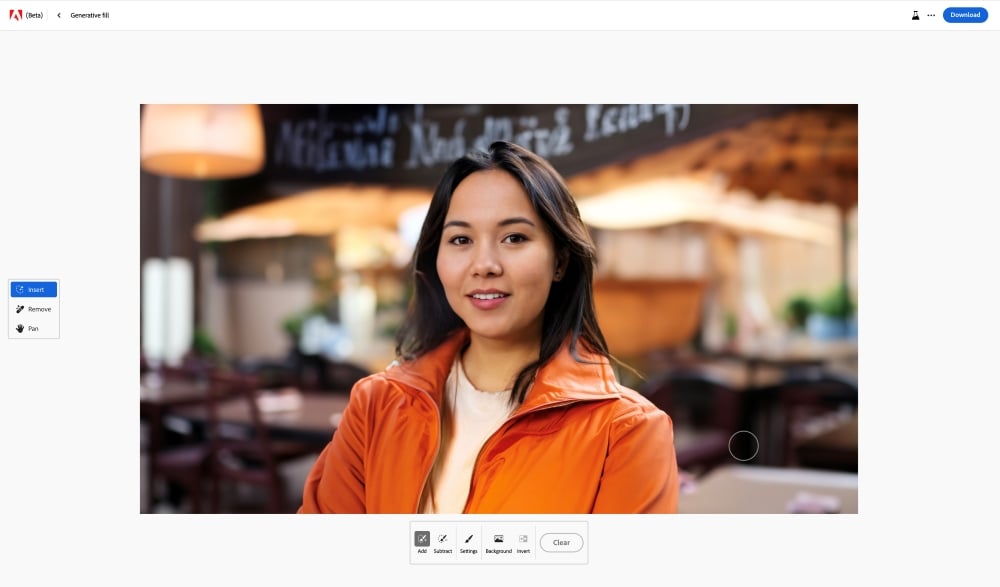

Here’s a sample image that Adobe provided. The woman is wearing an orange jacket and standing in a restaurant. Her portrait was taken with a shallow depth of field, which is why the background is blurry while she is in sharp focus.

You can use the Insert tool to highlight her clothes and describe a new look. The line “A black cocktail dress” is entered into the search box.

Almost instantly, Firefly puts her in a dress appropriate for the evening. Several options are provided. The first one wasn’t great, but this option with the necklace should work.

Changing out the background is just as easy. Click the “Background” button and enter a prompt, like “a cocktail bar.”

And Firefly delivers an appropriate background, with a bit of an awkward attempt at a hand holding a glass with a clutch purse attached.

Is this image going to go up on a billboard anytime soon? Probably not. Could a professional Photoshop artist do it better? Of course. But there will be plenty of uses for this level of imagery. And as time goes on, the AI will keep improving.

Text to Image

The next tool that Adobe offers is called “Text to Image.” You can describe a scene and see what comes up. Just for fun, let’s go across the room and see what Adobe gives us for “a man holding a drink wearing a black suit in a cocktail bar.”

And a dashing selection of well-dressed gentlemen appears. Their hands don’t look too bad, just a little off. Maybe one of them would be a good match for our lady above.

Text effects

Firefly’s next tool lets you experiment with some wild text effects. In this example, the word “Yum” is filled with a 3D pattern of “Mediterranean cuisine.” The sidebar shows a range of other options, like Snake, Balloon, or Bread Toast. You can change the background color and then copy and paste the image for use elsewhere.

There are also ways to produce variations on the text effects. For instance, you can change the “fit” from medium to loose. Now you can see how the design spills outside of the letters.

Generative recolor

Adobe has made one more tool available in the Firefly web demo. It’s called “Generative recolor.” You upload an SVG (Scalable Vector Graphic), and then you can choose from several tools that let you rework the color palette.

You can choose from the suggested themes or use text to create your own. Additionally, you can select the “harmony” of the color palette, like complementary or triad.
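Harmonies like these come from classic color-wheel geometry: a complementary scheme pairs a hue with the one 180° opposite it, and a triad uses three hues spaced 120° apart. The sketch below illustrates that idea by rotating hue in HLS space with Python’s standard `colorsys` module. It is only an assumption about how such palettes can be derived; Adobe hasn’t said how Generative recolor computes its harmonies, and the function names (`harmony`, `rotate_hue`) are hypothetical.

```python
import colorsys

# Hypothetical hue offsets (in degrees) for two classic color-wheel harmonies.
HARMONY_OFFSETS = {
    "complementary": (0, 180),   # the base hue plus its opposite
    "triad": (0, 120, 240),      # three hues evenly spaced around the wheel
}


def hex_to_rgb(hex_color: str) -> tuple[float, float, float]:
    """Convert '#RRGGBB' into (r, g, b) floats in the range [0, 1]."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) / 255 for i in (0, 2, 4))
    return r, g, b


def rgb_to_hex(rgb: tuple[float, float, float]) -> str:
    """Convert (r, g, b) floats in [0, 1] back into '#RRGGBB', clamping to range."""
    return "#" + "".join(f"{round(min(max(c, 0.0), 1.0) * 255):02X}" for c in rgb)


def rotate_hue(hex_color: str, degrees: float) -> str:
    """Rotate a color's hue on the HLS wheel, keeping lightness and saturation."""
    h, l, s = colorsys.rgb_to_hls(*hex_to_rgb(hex_color))
    h = (h + degrees / 360.0) % 1.0
    return rgb_to_hex(colorsys.hls_to_rgb(h, l, s))


def harmony(hex_color: str, kind: str) -> list[str]:
    """Return a palette for the given harmony, starting with the base color."""
    return [rotate_hue(hex_color, d) for d in HARMONY_OFFSETS[kind]]


if __name__ == "__main__":
    print(harmony("#FF0000", "complementary"))
    print(harmony("#FF0000", "triad"))
```

Running it prints `['#FF0000', '#00FFFF']` and then `['#FF0000', '#00FF00', '#0000FF']`: red’s complement is cyan, and its triad adds pure green and blue. Design tools may use a different wheel (such as the painter’s red-yellow-blue wheel), so their suggestions can differ from this simple HLS rotation.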

Firefly for video

Firefly is still in beta and focused on still images, but here’s a look at what Adobe has coming for video. There’s an exciting set of features on the way for video editors and motion graphics artists.

Depth

Adobe demonstrated the ability to add depth to an image with a prompt, “A sunlit living room with modern furniture and a large window.”

Firefly then scans the image and appears to understand the dimensional aspects of the space.

This allows for the image to be shown with multiple styling options. I could see this kind of tool being used by production designers to create looks for shoots.

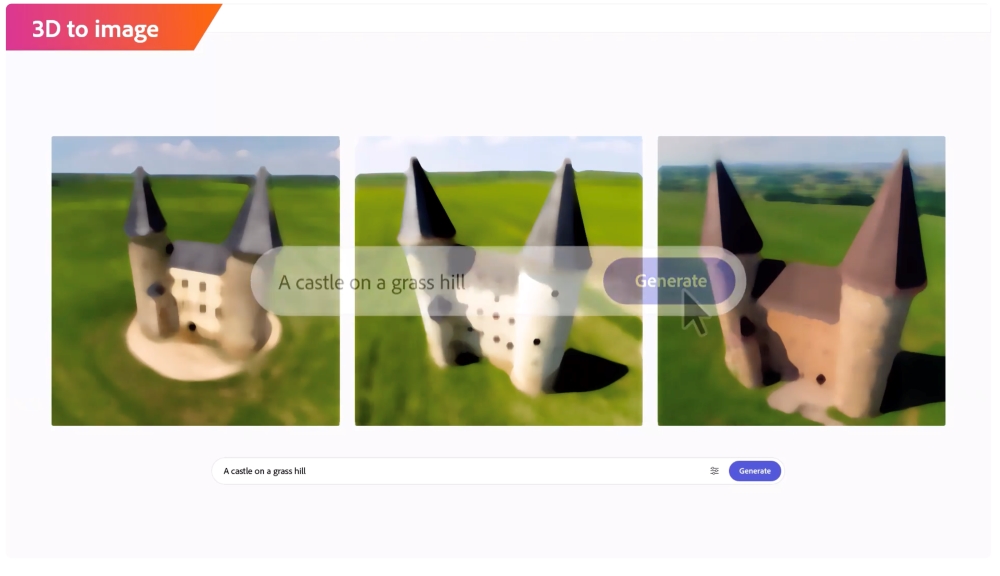

3D to image

Adobe demonstrates how Firefly will go beyond understanding 3D depth in 2D images. Firefly will actually be able to make 3D objects to place in your scenes.

In this example, they show how a 3D object of a castle can be composited into a generated scene with a prompt.

That same model can then be restyled into a “castle dessert.” Firefly changes the appearance of both the model and the scene, understanding that the natural setting for a dessert might be a plate or a picnic table.

Conversational editing

Most of the time, the initial image that you get from AI won’t be exactly what you need. But if you refresh the search, you end up back at square one. Conversational editing allows you to keep tweaking the image until you get what you want by “texting” your image. You can become the ultimate annoying client, and your AI designer will never grumble.

The sequence starts with the image of a dog.

The first prompt is to dress the dog in a Santa suit.

And that’s followed by a request to put him in front of a gingerbread house.

Unlimited iterations

Unlimited, instant iterations of artwork have the potential for absolute chaos when it comes to creative deliverables. The mind boggles at the revision requests graphic artists will endure. And then, once they have shipped their work, clients will go to work texting the image to change it further.

VFX artists on “Spider-Verse” talked about the cycle of revisions and the long hours that went with it. The executive responded to their concerns: “I guess; welcome to making a movie.” On one side, artists fear losing their jobs to AI. But there is a disconcerting intermediate step: it will be so easy to change art that an artist’s intent may not be reflected in the “final” project. This may have a chilling effect on people’s desire to enter the arts professionally. Nobody knows the future, but we know it will look different than it does today.

Audio production

Adobe’s video showcased many advancements in video production. And they aren’t limited to images. Custom music and soundtracks will be incorporated into Adobe’s tools.

Sound effects based on items in the images will automatically be created. Firefly will understand the elements in your images and suggest appropriate sound effects.

The “effect” this will have on the stock music and stock sound effects industries will be monumental. We’ve already seen many AI tools that can help with voice isolation and noise reduction. Currently, editors subscribe to music and sound effects websites. If Adobe builds AI tools into their video editing apps that automatically suggest sound effects and music from libraries, those sites will have a major uphill climb getting people to purchase music and sound effects files that don’t adapt to the duration of their timelines.

Video Editing

The Firefly engine looks like it will take a significant amount of grunt work out of video editing. Adobe is building workflows to automatically insert B-roll based on the script or voice-over. It would only make sense that those B-roll clips could be generated by AI rather than limited to what you shot that day.

Adobe showed off automated storyboards and previs based on the script, as well as color grading and relighting driven by text prompts. Captions and animated 3D text are just a prompt away.

The high end of the editing world may again coalesce around masters of the craft. However, the medium and low ends of the video editing world will undoubtedly shift, as creators will be able to craft films with nothing more than a keyboard.

Adobe’s goals

Rather than making Firefly a standalone product, Adobe wants to integrate it into its existing tools. We’ll see more generative AI features throughout its products as it gradually eliminates formerly time-consuming tasks. Adobe has committed itself to an approach to AI that doesn’t steal work and avoids biases, and it is building tools to help protect imagery from being scanned by AI bots. At the same time, governments like the UK’s are considering laws to label AI images, and AI-generated sequences like the Secret Invasion intro from Marvel are seeing some backlash. Adobe knows its customers, so hopefully it can walk the fine line of empowering creatives without displacing them.

Conclusion

Adobe’s vision for the Firefly engine is technically ambitious and thrilling for anyone who wants to create. The desire to turn our words into worlds is as old as time itself. The result may be an explosion of creativity, or it may be a mountain of uncanny images. But one thing is certain: this is just the spark of the AI revolution.

MediaSilo allows for easy management of your media files, seamless collaboration for critical feedback, and out-of-the-box synchronization with your timeline for efficient changes. See how MediaSilo is powering modern post-production workflows with a 14-day free trial.